I updated the domain to match the headline https://RandomNeuronsFiring.com Lots of new content! See you over there!

SQLite Date and Time Functions – explained

A while back, I was greatly confused by SQLite date and time functions. It took me a while to figure out what was wrong. (It was my error: I hadn’t observed the rule that dates must have this form “YYYY-MM-DD” – four-digit year, two-digit month and day.)

Nevertheless, I found that the documentation wasn’t quite clear, so I wrote up these notes as an adjunct to SQLite Datatypes and the SQLite Date and Time Functions pages.
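The formatting rule is easy to check from a script. Here is a minimal sketch, assuming Python's built-in sqlite3 module and an in-memory database; the helper name `sqlite_date` is made up for illustration. A date without leading zeros is not a valid timestring, so SQLite should hand back NULL (Python's `None`) rather than an error.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

def sqlite_date(timestring):
    """Return date(timestring) as SQLite computes it (None when SQLite yields NULL)."""
    return conn.execute("SELECT date(?)", (timestring,)).fetchone()[0]

well_formed = sqlite_date("2018-03-07")  # zero-padded month and day
malformed = sqlite_date("2018-3-7")      # missing leading zeros

print(well_formed)
print(malformed)
```

A silent NULL is what makes this mistake hard to spot: the query runs, it just returns nothing useful.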

2.2. Date and Time Datatype

SQLite does not have a storage class set aside for storing dates and/or times.

The conventional way to store dates is as a string in a TEXT field.

These fields can be compared directly (as strings) to determine equality or order.

For other date-as-string formats, see Date Strings on the Date And Time Functions page.
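To see why string comparison works for dates, here is a small sketch (again assuming Python's sqlite3 module; the `events` table and its columns are hypothetical). Zero-padded “YYYY-MM-DD” strings sort in chronological order, while an unpadded date compares incorrectly.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (name TEXT, happened_on TEXT)")  # hypothetical table
conn.executemany(
    "INSERT INTO events VALUES (?, ?)",
    [("october", "2018-10-02"), ("march", "2018-03-07"), ("august", "2004-08-19")],
)

# Plain text ordering is also chronological ordering for padded dates.
ordered = [row[0] for row in conn.execute("SELECT name FROM events ORDER BY happened_on")]
print(ordered)

# The comparison is character by character, so padding matters.
padded_ok = conn.execute("SELECT '2018-03-07' < '2018-10-02'").fetchone()[0]
unpadded_ok = conn.execute("SELECT '2018-3-7' < '2018-10-02'").fetchone()[0]
print(padded_ok, unpadded_ok)
```

In the unpadded case, the character “3” sorts after “1”, so March appears to come after October.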

For further manipulations on dates and times, the built-in Date And Time Functions of SQLite convert dates and times between TEXT, REAL, or INTEGER values:

- TEXT as strings (“YYYY-MM-DD HH:MM:SS.SSS” – with leading zero where required, and four-digit year – a so-called “timestring”)

- REAL as Julian day numbers, the number of days (with fractional part) since noon in Greenwich on November 24, 4714 B.C. according to the proleptic Gregorian calendar.

- INTEGER as Unix Time, the number of seconds since 1970-01-01 00:00:00 UTC.

Applications can choose to store dates and times in any of these formats and freely convert between formats using the built-in date and time functions.
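As an illustration of moving between the three representations, the sketch below (assuming Python's sqlite3 module) starts from a Unix timestamp, converts it to a timestring and a Julian day number, and then converts the timestring back. The CAST is there because strftime() returns its result as text.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
UNIX_TIME = 1092941466  # INTEGER form: seconds since 1970-01-01 00:00:00 UTC

# INTEGER -> TEXT timestring, and INTEGER -> REAL Julian day number
as_text, as_julian = conn.execute(
    "SELECT datetime(?, 'unixepoch'), julianday(?, 'unixepoch')",
    (UNIX_TIME, UNIX_TIME),
).fetchone()

# TEXT -> INTEGER again
back_to_unix = conn.execute(
    "SELECT CAST(strftime('%s', ?) AS INTEGER)", (as_text,)
).fetchone()[0]

print(as_text, as_julian, back_to_unix)
```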

Date And Time Functions

The Date and Time Functions page doesn’t really define the arguments or the return types, so I make them explicit below.

Timestring: The conventional way to store dates is as a timestring – a TEXT field (e.g., “YYYY-MM-DD HH:MM:SS.SSS”). These fields can be compared directly (as strings) to determine equality or order.

To convert to other date representations, SQLite supports five date and time functions. All take a timestring (a subset of ISO 8601 date and time formats, listed below) as an argument. The timestring is followed by zero or more modifiers. The strftime() function also takes a format string as its first argument.

- date(timestring, modifier, modifier, …) Returns the date as a string: “YYYY-MM-DD”.

- time(timestring, modifier, modifier, …) Returns the time as a string: “HH:MM:SS”.

- datetime(timestring, modifier, modifier, …) Returns a string: “YYYY-MM-DD HH:MM:SS”.

- julianday(timestring, modifier, modifier, …) Returns the Julian day as a REAL – the number of days (and fractional part) since noon in Greenwich on November 24, 4714 B.C. (Proleptic Gregorian calendar).

- strftime(format, timestring, modifier, modifier, …) Returns the date formatted according to the format string specified as the first argument. The format string supports the most common substitutions found in the strftime() function from the standard C library plus two new substitutions, %f and %J.

… see the original SQLite page for modifiers and legal timestring formats …
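One way to confirm the return types listed above is to ask SQLite itself with typeof(). This is a sketch assuming Python's sqlite3 module; the sample timestring is arbitrary.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
TIMESTRING = "2018-03-07 17:36:38"  # arbitrary sample value

# One representative call per function; ? is bound to TIMESTRING.
calls = {
    "date": "date(?)",
    "time": "time(?)",
    "datetime": "datetime(?)",
    "julianday": "julianday(?)",
    "strftime": "strftime('%s', ?)",
}

result_types = {}
for name, expr in calls.items():
    value, sql_type = conn.execute(
        f"SELECT {expr}, typeof({expr})", (TIMESTRING, TIMESTRING)
    ).fetchone()
    result_types[name] = sql_type
    print(f"{name:10} {sql_type:5} {value!r}")
```

Only julianday() reports a numeric type; the other four report text, including strftime() with a numeric-looking format such as %s.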

Examples

This section replicates the examples of the original page, but includes the results and types of the function.

Compute the current date. Returns timestring.

SELECT date('now'); -- Result: 2018-03-07

Compute the last day of the current month. Returns timestring.

SELECT date('now','start of month','+1 month','-1 day'); -- Result: 2018-03-31

Compute the date and time given a unix timestamp 1092941466. Returns timestring.

SELECT datetime(1092941466, 'unixepoch'); -- Result: 2004-08-19 18:51:06

Compute the date and time given a unix timestamp 1092941466, and compensate for your local timezone. Returns timestring.

SELECT datetime(1092941466, 'unixepoch', 'localtime'); -- Result: 2004-08-19 14:51:06

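Compute the current unix timestamp. Returns TEXT – a string of digits, because strftime() always returns a string. Use CAST(strftime('%s','now') AS INTEGER) if you need an INTEGER.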

SELECT strftime('%s','now'); -- Result: 1520444198

Compute the number of days since the signing of the US Declaration of Independence. Returns REAL – days and fractions of a day.

SELECT julianday('now') - julianday('1776-07-04'); -- Result: 88269.7339379285

Compute the number of seconds since a particular moment in 2004. Returns INTEGER (subtracting the two strings converts them to numbers).

SELECT strftime('%s','now') - strftime('%s','2004-01-01 02:34:56'); -- Result: 447519729
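The subtraction can be reproduced with a fixed end point so the result is repeatable. This is a sketch assuming Python's sqlite3 module; the END value is a stand-in for 'now', chosen to fall on the same day as the results shown in these examples. It also reports the types involved: each strftime() call yields text, and the subtraction yields an integer.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
START = "2004-01-01 02:34:56"
END = "2018-03-07 17:36:38"  # fixed stand-in for 'now' (an assumption for repeatability)

single_type, diff, diff_type = conn.execute(
    """
    SELECT typeof(strftime('%s', :end)),
           strftime('%s', :end) - strftime('%s', :start),
           typeof(strftime('%s', :end) - strftime('%s', :start))
    """,
    {"start": START, "end": END},
).fetchone()

print(single_type, diff, diff_type)
```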

Compute the date of the first Tuesday in October for the current year. Returns timestring.

SELECT date('now','start of year','+9 months','weekday 2'); -- Result: 2018-10-02
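Modifiers are applied left to right, which is easier to follow when each intermediate result is visible. The sketch below (assuming Python's sqlite3 module; `apply_modifiers` is a made-up helper) replays the “last day of the month” and “first Tuesday in October” examples one modifier at a time, starting from a fixed date instead of 'now'.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

def apply_modifiers(timestring, *modifiers):
    """Return the date after zero, one, two, ... of the modifiers have been applied."""
    steps = []
    for count in range(len(modifiers) + 1):
        placeholders = ", ".join("?" * (count + 1))
        row = conn.execute(
            f"SELECT date({placeholders})", (timestring, *modifiers[:count])
        ).fetchone()
        steps.append(row[0])
    return steps

month_steps = apply_modifiers("2018-03-07", "start of month", "+1 month", "-1 day")
tuesday_steps = apply_modifiers("2018-03-07", "start of year", "+9 months", "weekday 2")

print(month_steps)
print(tuesday_steps)
```

The 'weekday 2' modifier moves the date forward to the next Tuesday (weekday 0 is Sunday) and leaves it alone if it already is one.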

Compute the time since the unix epoch in seconds (like strftime('%s','now') except includes fractional part). Returns REAL – seconds and fractions of a second.

SELECT (julianday('now') - 2440587.5)*86400.0; -- Result: 1520444280.01899
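The constant 2440587.5 in that query is the Julian day number of the Unix epoch, and 86400 is the number of seconds in a day. A quick check with a fixed timestring, assuming Python's sqlite3 module (the sample value with half a second is arbitrary):

```python
import sqlite3

conn = sqlite3.connect(":memory:")
TIMESTRING = "2004-08-19 18:51:06.500"  # arbitrary sample with a fractional second

epoch_jd, fractional_seconds, whole_seconds = conn.execute(
    """
    SELECT julianday('1970-01-01 00:00:00'),
           (julianday(:ts) - 2440587.5) * 86400.0,
           CAST(strftime('%s', :ts) AS INTEGER)
    """,
    {"ts": TIMESTRING},
).fetchone()

print(epoch_jd)            # Julian day number of the Unix epoch
print(fractional_seconds)  # seconds since the epoch, fraction included
print(whole_seconds)       # whole seconds only, from strftime('%s', ...)
```

Because the Julian day is a floating-point number, the fractional result is only approximate in its last digits.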

Local by Flywheel won’t start because it’s regenerating Docker Machine TLS certificates

I have been using Local by Flywheel and really enjoying it. It does two things:

- You can stand up a development version of a WordPress site on your laptop and horse around with it. It’s fast, you can make experiments, and if it blows up, you can simply regenerate in a minute or two.

- Using the (paid) Flywheel hosting, you can transfer your local dev server to their public hosting, and you’re on the air.

I have not used this latter facility, but I’m here to tell you that the first part is pretty slick.

But… I went away from Local by Flywheel for a month or so, then came back to start working on a new site. When I wanted to start it up, I saw a succession of messages stating that it was “Regenerating Machine Certificates” and that “Local detected invalid Docker Machine TLS certificates and is fixing them now.” This looped, apparently forever, and Local never started. Here’s my report on their community forum.

After considerable searching, I found a procedure from one of the developers that seems to do the trick. It involves downloading a new version of the Boot2Docker ISO file, and letting the system re-provision itself. The process involved a) creating an alias (“local-docker-machine”) for Local by Flywheel’s docker-machine binary; b) issuing a series of commands to that alias:

local-docker-machine stop local-by-flywheel

rm -rf ~/.docker/machine/certs

local-docker-machine create local-cert-gen

local-docker-machine start local-by-flywheel

local-docker-machine regenerate-certs -f local-by-flywheel

local-docker-machine rm -f local-cert-gen

These steps caused Local by Flywheel to recognize that the Boot2Docker ISO was out of date. It triggered a download of the new version, and gave the output below. When it completed, Local by Flywheel worked as expected. Whew!

bash-3.2$ alias local-docker-machine="/Applications/Local\ by\ Flywheel.app/Contents/Resources/extraResources/virtual-machine/vendor/docker/osx/docker-machine"

bash-3.2$

bash-3.2$ local-docker-machine stop local-by-flywheel; rm -rf ~/.docker/machine/certs; local-docker-machine create local-cert-gen; local-docker-machine start local-by-flywheel; local-docker-machine regenerate-certs -f local-by-flywheel; local-docker-machine rm -f local-cert-gen;

Stopping "local-by-flywheel"...

Machine "local-by-flywheel" is already stopped.

Creating CA: /Users/richb/.docker/machine/certs/ca.pem

Creating client certificate: /Users/richb/.docker/machine/certs/cert.pem

Running pre-create checks...

(local-cert-gen) Default Boot2Docker ISO is out-of-date, downloading the latest release...

(local-cert-gen) Latest release for github.com/boot2docker/boot2docker is v18.09.1

(local-cert-gen) Downloading /Users/richb/.docker/machine/cache/boot2docker.iso from https://github.com/boot2docker/boot2docker/releases/download/v18.09.1/boot2docker.iso...

(local-cert-gen) 0%....10%....20%....30%....40%....50%....60%....70%....80%....90%....100%

Creating machine...

(local-cert-gen) Copying /Users/richb/.docker/machine/cache/boot2docker.iso to /Users/richb/.docker/machine/machines/local-cert-gen/boot2docker.iso...

(local-cert-gen) Creating VirtualBox VM...

(local-cert-gen) Creating SSH key...

(local-cert-gen) Starting the VM...

(local-cert-gen) Check network to re-create if needed...

(local-cert-gen) Waiting for an IP...

Waiting for machine to be running, this may take a few minutes...

Detecting operating system of created instance...

Waiting for SSH to be available...

Detecting the provisioner...

Provisioning with boot2docker...

Copying certs to the local machine directory...

Copying certs to the remote machine...

Setting Docker configuration on the remote daemon...

Checking connection to Docker...

Docker is up and running!

To see how to connect your Docker Client to the Docker Engine running on this virtual machine, run: /Applications/Local by Flywheel.app/Contents/Resources/extraResources/virtual-machine/vendor/docker/osx/docker-machine env local-cert-gen

Starting "local-by-flywheel"...

(local-by-flywheel) Check network to re-create if needed...

(local-by-flywheel) Waiting for an IP...

Machine "local-by-flywheel" was started.

Waiting for SSH to be available...

Detecting the provisioner...

Started machines may have new IP addresses. You may need to re-run the `docker-machine env` command.

Regenerating TLS certificates

Waiting for SSH to be available...

Detecting the provisioner...

Copying certs to the local machine directory...

Copying certs to the remote machine...

Setting Docker configuration on the remote daemon...

About to remove local-cert-gen

WARNING: This action will delete both local reference and remote instance.

Successfully removed local-cert-gen

Internet Identity, Nationwide Bank, and the Post Office

Dave Winer wrote about “internet identity” and that several companies were probably thinking about solving the problem. Specifically, he said:

But because money is so central to identity, it’s surprising that there isn’t a Google or Amazon of identity. Seems there’s money to be made here. An organization with physical branches everywhere, with people in them who can help with indentity (sic) problems.

This reminded me of the proposal to have US Post Offices become banks (for example, here and a zillion other places.)

The advantages:

- There are post offices everywhere. The postal system is constitutionally mandated to be present, so it’s useful for them to have a valuable mission even as the volume of paper mail declines.

- The “Bank of the US Post Office” could provide an ATM at each branch. You could withdraw cash without fees anywhere in the US.

- They could provide low-cost (no-cost?) savings/checking accounts for the traditionally “unbanked”, instead of making people use check cashing services, payday lenders, etc. who siphon off a percentage of the transaction.

- Postal employees have a strong ethos of caring for the transactions, and already have procedures for handling cash.

- Post Offices are accustomed to handling critical, private matters in a timely way.

Identity management seems another valuable service that the USPS might provide.

Linking Reservation Nexus and TripAdvisor

Connecting ResNexus and TripAdvisor

We wanted our room availability to show up in TripAdvisor and other online services. There are two basic steps, where you tell Reservation Nexus and TripAdvisor how to find each other’s information:

- Use Reservation Nexus Availability Exchange to share your room availability

- Use TripAdvisor TripConnect to link up your business to the Reservation Nexus listings

Note: The business name, postal address, URL, and email must be exactly the same in both ResNexus and TripAdvisor. Check them before starting this procedure.

On the Reservation Nexus site:

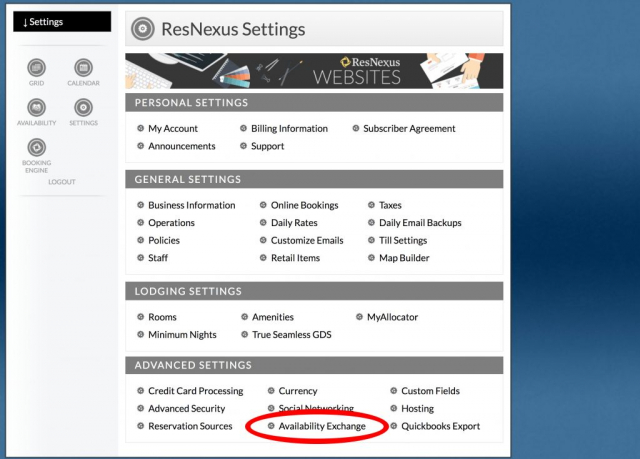

- In the ResNexus Settings choose Availability Exchange, near the bottom of the settings (first image below).

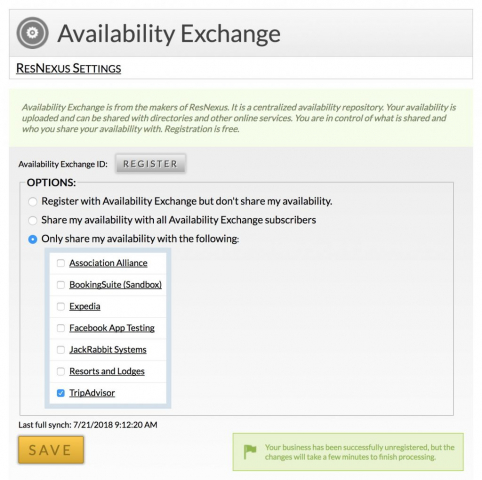

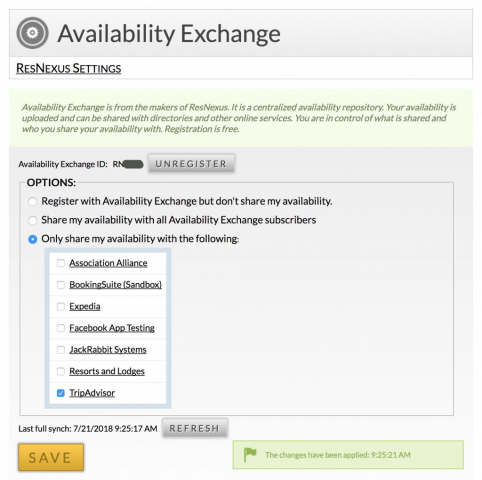

- In the Availability Exchange page:

- Click the REGISTER button to register your rooms

- Click Only share my availability… and check off the desired services. (second image)

- Click SAVE. The resulting page (third image below) shows:

- Your Availability Exchange ID next to the UNREGISTER button

- The Last full synch time

On your TripAdvisor site:

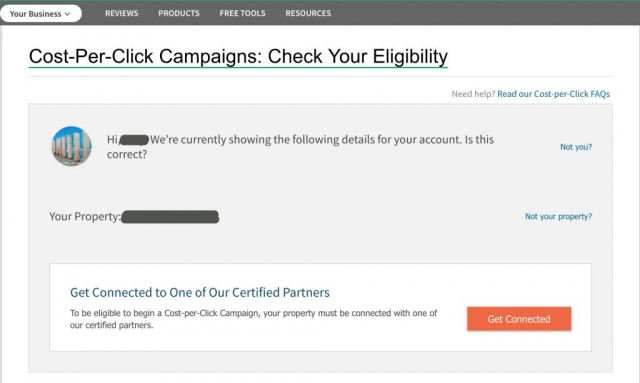

- Log into TripAdvisor

- Go to https://www.tripadvisor.com/CostPerClick and click Check your Eligibility. It will show a page naming your property to link to the Cost per Click program. (first image below)

- Click Get Connected. You will see a page listing the choices. (second image)

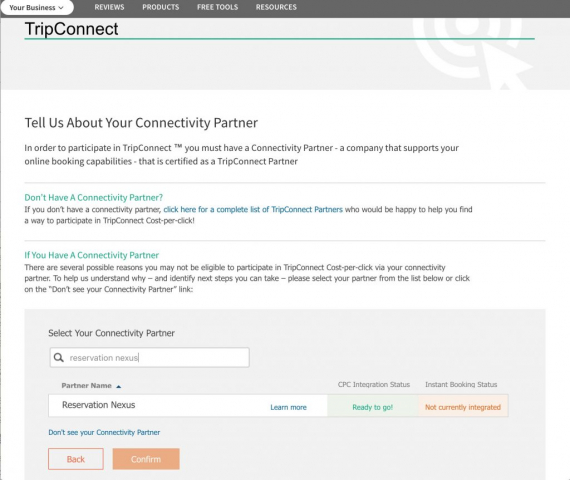

- Find “Reservation Nexus” and click it to select it, then click Confirm. (third image)

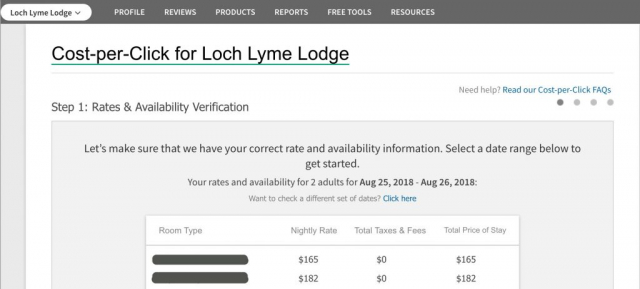

- The confirmation page (fourth image below) should show property prices for a specific night. This confirms that the connection has been established. Continue with the cost-per-click process with TripConnect.

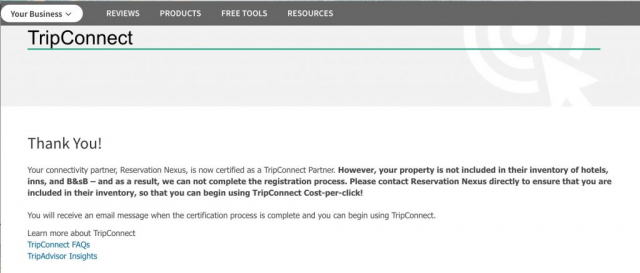

- If you see an error (fifth image), ensure that your contact information in Reservation Nexus and TripAdvisor is exactly the same.

Troubleshooting

- When it works, the connection between Reservation Nexus and TripAdvisor should happen almost immediately, and you should see the confirmation page listing your property prices.

- If you had to modify your ResNexus info, then you may need to contact ResNexus to have them re-publish your TripConnect info.

- Contact Reservation Nexus if the connection has not completed within an hour.

Taxpayer-Funded Networks – all that bad?

I saw an article fretting about taxpayer-funded broadband projects in Texas Monitor. It cites a “study” by the Taxpayer Protection Alliance Foundation that purports to show a wide swath of “failed taxpayer-funded networks”.

A little research on the site led me to realize that it’s not first-rate work – outdated, incorrect information – so I left the following comment on the Texas Monitor site:

I decided to check the “Broadband Boondoggles” site to see what information they provide. First off, the copyright date on the site’s footer says 2017 – are they even updating it?

More specifically, I found that they disparage the local ECFiber.net project (in VT) of which I have personal knowledge. They state that as of January 2015 ECFiber has spent $9M to connect 1,200 subscribers (“an astounding $7,500 per customer.”)

Well, that may be true – as of that date. If they had bothered to follow up with ECFiber’s progress (https://www.ecfiber.net/history/) they would have learned:

- As of January 2018 they have connected over 2000 customers (cost per subscriber is now roughly half that reported number)

- They’re hampered by the pole “make ready” process by the incumbent monopoly carriers who are slow to respond. They could connect subscribers faster if the carriers would follow their legal make-ready obligations.

- ECFiber is a private community effort, entirely funded with grants and private equity/loans, so I’m curious how they could even have filed a FOIA request.

- They’ve now raised $23M capital (from the private markets), to reach 20,000 subscribers.

- This gives a system-wide average cost of $1,150/subscriber – a very attractive cost.

I’m sure there are false starts and overruns for many municipal projects, but if this outdated information is typical of the remainder of the TPAF site, then I would be reluctant to accept any of its conclusions without doing my own research.

WordPress Meetup in Londonderry

I’ll be speaking next month at the WordPress Meetup about using Docker to host a development WP server on your laptop. Here’s the writeup:

Docker for WordPress

Docker enables developers to easily pack, ship, and run any application (including WordPress) as a lightweight, self-sufficient container which can run virtually anywhere.

For WordPress users, this means it’s easy to set up a lightweight development WP server on your laptop/desktop. You can make and test changes before migrating it to your client’s site. Best of all, if you screw things up, you can simply discard the container’s files and start afresh in a couple minutes. And because it’s running on your local computer, no need to worry about hosting, configuring servers, etc.

Rich will show how to install the Docker application on a laptop, then install and start a WordPress Docker container. The result is the familiar new WP install that you can customize to your heart’s (or client’s) content.

The WordPress Meetup is open to all on Tuesday, 8 May. Sign up at https://www.meetup.com/WordPressDevNH/events/249032144/

Fake News News

I went to a terrific talk at the Lyme Library earlier this week.

Randall Mikkelsen from Reuters spoke on the topic, “Fake News: What’s the Real Story?”. In it, he presented The Chart which is an analysis of popular web sites showing their bias (left, center, right) with a measure of their reliability/believability. It’s useful to check your reading habits to see if they match your expectations.

That site also has Six Flags to Identify a Conspiracy Theory Article. This is an easy way to check your reading matter to see if it’s “actual news” or just somebody writing to get you fired up. (I also included a comment – what do you think?)

How to Write Wiki Pages So People Will Read Them

So you’ve just learned something cool on a new subject, and you want to let the world know about your discovery. You go to the project’s wiki, and jot it all down. But how can you help people read what you’ve written?

When I look at pages on a wiki, I use three criteria to determine whether I want to spend the time to read a page. If I’m convinced that the page has the info I’m seeking, I’ll work hard to understand it. But if I can’t tell whether it’s any good, it’s just faster to post a query to the forum. Here are the questions I ask:

- Is this page for me? Does it apply to my situation?

There are a lot of cues to whether a page “is for me”. Obviously the title/heading of the page is important. But when I’m seeking information, I’m not usually an expert in the subject. I need help to understand the topic, and I look for a description that tells what the page is about. I also look for cues to see if it’s up to date. Finally, I love a page that has an introductory section that talks about the kinds of info that I’ll find on the page.

- Does the author know more than I do?

A number of factors influence this judgement. As you’re aware, there’s a huge range of knowledge level of wiki page authors – from expert to the newcomer who’s excited to document his first discovery. As I scan through a page, I’m looking for facts that confirm what I already know (proving the author has some skill), and then things that I don’t (showing they know more.) Finally, it helps to know that the author is aware of the conventions of the wiki – does it look like other wiki pages? If so, I get some comfort that the author is aware of the way other wiki pages work/look.

- Can I figure out what to do?

My final question about whether a page is useful is whether I can use the information. If it’s a tutorial/howto, I want the steps clearly stated – “step 1, step 2, step 3, then you’re done.” If it’s a reference page, is the information organized in a comprehensible fashion? Is it really long? Can I pick out what’s important from incidental info?

The challenge I put to every author is to organize the information in a way that presents the most frequently-sought info first, then figure out what to do with the rest. You might move sections around, move some information onto its own separate page, coalesce it into an existing/similar wiki page, or even create forum articles (instead of a wiki page) if the subject is rapidly evolving.

Typical Net Neutrality coverage – accepting untruths from ISPs

I just sent an email to the reporter from NewsPressNow who posted a typical net neutrality story. A flaw in this kind of reporting is the tacit acceptance of an ISP’s blandishments that the Internet was fine before the 2015 FCC rules, and that “… And I don’t know if you’d find anyone who said there was a problem with the internet.”

Well, someone said there was a problem, because Comcast paid a $16 million fine to settle a lawsuit for blocking/throttling legal internet traffic, exactly the kind of behavior that would be permitted by the change of rules. As I said in my note to the reporter:

I don’t know whether he [the source at the ISP] is ignorant of history, or simply baldly saying things that are known to be false, but a quick google of “Comcast throttle bittorrent” will turn up copious evidence that some ISPs were throttling the internet in those “good old days”. See, for example, these two articles that offer technical details of the Comcast case:

Wired: https://www.wired.com/2007/11/comcast-sued-ov/ and

ArsTechnica: https://arstechnica.com/tech-policy/2009/12/comcast-throws-16-million-at-p2p-throttling-settlement/

This behavior by Comcast is the best documented, but I believe more research would turn up more ISPs who dabbled in various kinds of throttling behaviors before the Title II language went into effect.

I encouraged the reporter to update the story with a reaction to this information from his source at the ISP.